Working with Cal-Adapt Climate Data in R:

Finding Data and Best Practices for Analyzing Climate Data

The Cal-Adapt API server has 11 preset areas of interest (aka boundary layers).

You can query these features without passing a spatial object!

Example:

norcal_cap <- ca_loc_aoipreset(type = "counties",
                               idfld = "fips",
                               idval = c("06105", "06049", "06089", "06015")) %>%
  ca_gcm(gcms[1:4]) %>%
  ca_scenario(scenarios[1:2]) %>%
  ca_period("year") %>%
  ca_years(start = 2040, end = 2060) %>%
  ca_cvar("pr") %>%
  ca_options(spatial_ag = "max")

Plot an API request to verify the location:

An API request can use a simple feature data frame as the query location (point, polygon, and multipolygon).

Use sf::st_read() to import shapefiles, GeoJSON, KML, GeoPackage, Esri geodatabases, etc.

## Reading layer `pinnacles_bnd' from data source
## `D:\GitHub\cal-adapt\caladaptr-res\docs\workshops\ca_intro_apr22\notebooks\data\pinnacles_bnd.geojson'
## using driver `GeoJSON'
## Simple feature collection with 1 feature and 5 fields
## Geometry type: MULTIPOLYGON
## Dimension: XY
## Bounding box: xmin: -121.2455 ymin: 36.4084 xmax: -121.1012 ymax: 36.56416
## Geodetic CRS: WGS 84

Create an API request:

pin_cap <- ca_loc_sf(loc = pinnacles_bnd_sf, idfld = "UNIT_CODE") %>%
  ca_slug("et_month_MIROC5_rcp45") %>%
  ca_years(start = 2040, end = 2060) %>%
  ca_options(spatial_ag = "mean")

plot(pin_cap, locagrid = TRUE)

Fetch data:

caladaptR comes with a copy of the Cal-Adapt raster series “data catalog”.

For each raster series you can see properties such as the name, URL, temporal resolution, begin and end dates, units, and spatial extent.

The catalog can be retrieved using ca_catalog_rs() (returns a tibble).

PRO TIP

The best way to browse the catalog is with RStudio’s View pane. You can then use the filter buttons to find the raster series you want.

ca_catalog_rs() %>% View()

To search the catalog using a keyword, use ca_catalog_search().

To view the properties of a specific dataset (e.g., to see the units or start/end dates), search on the slug:

##
## snowfall_day_livneh_vic
## name: Livneh VIC daily snowfall
## url: https://api.cal-adapt.org/api/series/snowfall_day_livneh_vic/
## tres: daily
## begin: 1950-01-01T00:00:00Z
## end: 2013-12-31T00:00:00Z
## units: mm/day
## num_rast: 1
## id: 519
## xmin: -124.5625
## xmax: -113.375
## ymin: 31.5625
## ymax: 43.75

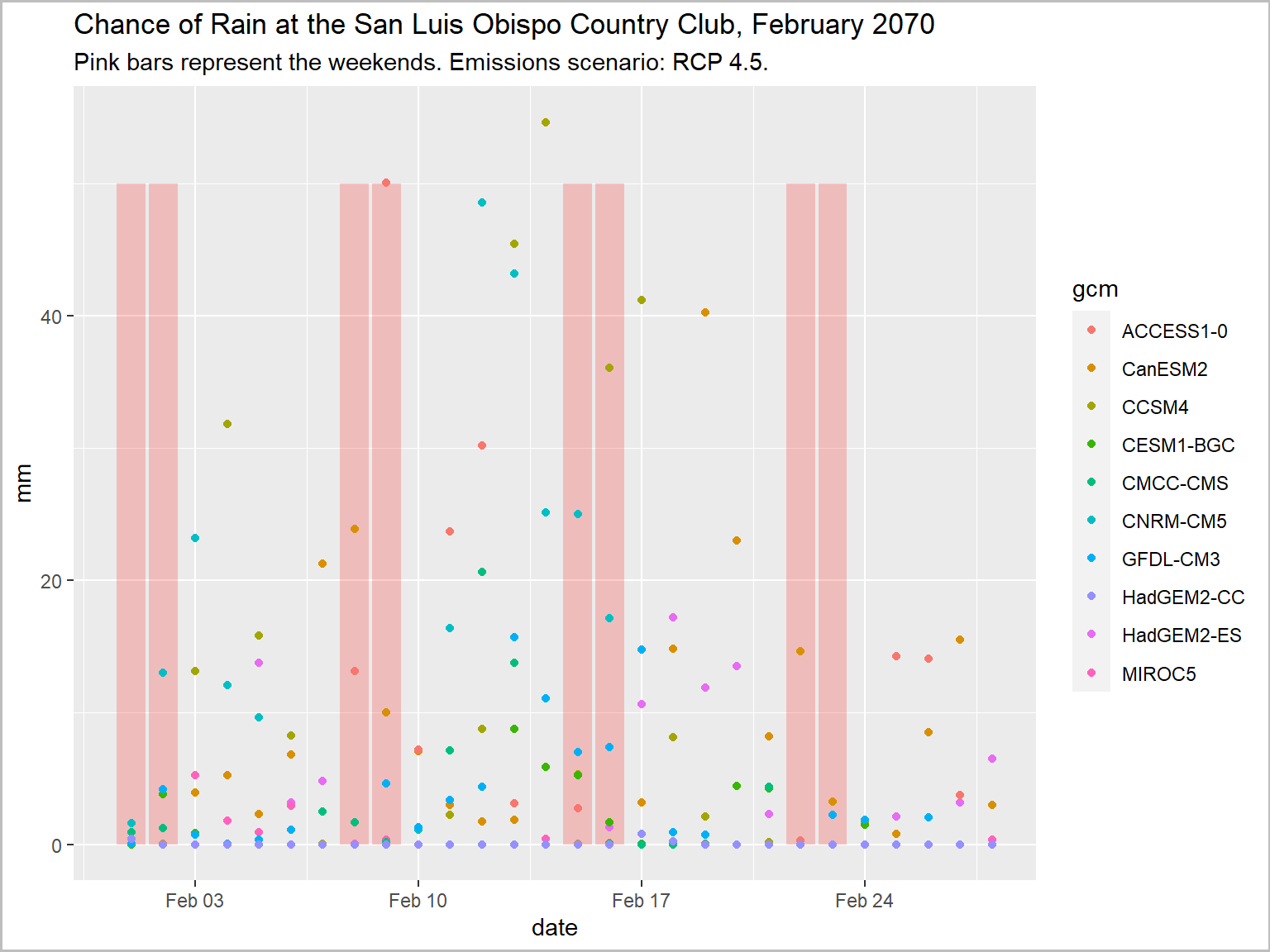

Is this a wise or unwise use of climate data?

library(caladaptr)
library(dplyr)
library(ggplot2)
library(units)

slo_cap <- ca_loc_pt(coords = c(-120.6276, 35.2130)) %>%
  ca_cvar("pr") %>%
  ca_gcm(gcms[1:10]) %>%
  ca_scenario("rcp45") %>%
  ca_period("day") %>%
  ca_dates(start = "2070-02-01", end = "2070-02-28")

slo_mmday_tbl <- slo_cap %>%
  ca_getvals_tbl(quiet = TRUE) %>%
  mutate(pr_mmday = set_units(as.numeric(val) * 86400, mm/day))

feb2070_weekends_df <- data.frame(dt = as.Date("2070-02-01") + c(0,1,7,8,14,15,21,22), y = 50)

ggplot(data = slo_mmday_tbl, aes(x = as.Date(dt), y = as.numeric(pr_mmday))) +
  geom_col(data = feb2070_weekends_df, aes(x = dt, y = y), fill = "red", alpha = 0.2) +
  geom_point(aes(color=gcm)) +
  labs(title = "Chance of Rain at the San Luis Obispo Country Club, February 2070",
       subtitle = "Pink bars represent the weekends. Emissions scenario: RCP 4.5.",
       x = "date", y = "mm") +
  theme(plot.caption = element_text(hjust = 0))
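The `* 86400` in the `mutate()` call above is a unit conversion. Here is a minimal sketch of it, assuming the API returns precipitation as a flux in kg/m²/s (which the conversion implies) and using made-up values. One kilogram of water spread over one square metre is a layer 1 mm deep, so multiplying by the number of seconds in a day gives mm/day.

```r
# Hypothetical precipitation flux values in kg/m^2/s (not real Cal-Adapt output)
pr_flux <- c(0, 1.5e-05, 1.2e-04)

# 1 kg of water over 1 m^2 is a 1 mm layer, so kg/m^2/s is numerically mm/s
seconds_per_day <- 24 * 60 * 60

# Convert the flux to a daily depth in mm/day
pr_mmday <- pr_flux * seconds_per_day
pr_mmday
```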

Climate models are designed to capture trends in climate.

By definition, climate is weather averaged over 30+ years.

⇒ If you’re not averaging at least 20-30 years of data, you’re probably doing something wrong.
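As a rough illustration of why the averaging window matters, the sketch below builds a synthetic annual series (a small trend plus random noise, not real Cal-Adapt data) and contrasts a single year with 30-year means. The trend size, noise level, and period boundaries are all assumptions chosen for the example.

```r
# Synthetic annual maximum temperature (deg C) for one model run, 2040-2099:
# an assumed warming trend plus year-to-year noise. Not real data.
set.seed(42)
yrs <- 2040:2099
tasmax_c <- 30 + 0.04 * (yrs - 2040) + rnorm(length(yrs), sd = 1.5)

# A single year can be dominated by noise rather than by the trend
tasmax_c[yrs == 2070]

# 30-year means describe the climate of each period
mean_2040_2069 <- mean(tasmax_c[yrs >= 2040 & yrs <= 2069])
mean_2070_2099 <- mean(tasmax_c[yrs >= 2070 & yrs <= 2099])
c(early = mean_2040_2069, late = mean_2070_2099)
```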

There is variability among models.

The biggest source of uncertainty (by far) is the future of emissions.

Both are important.

Variability can be within and/or between models.
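One way to look at both sources of variability side by side is to summarise across GCMs within each emissions scenario. The sketch below uses made-up 30-year means (the GCM labels are only illustrative) and base R, so it runs without any extra packages.

```r
# Made-up 30-year mean tasmax (deg C), one value per GCM and scenario
proj <- data.frame(
  gcm = rep(c("HadGEM2-ES", "CNRM-CM5", "CanESM2", "MIROC5"), times = 2),
  scenario = rep(c("rcp45", "rcp85"), each = 4),
  tasmax_c = c(31.2, 30.1, 30.8, 29.9, 33.0, 31.6, 32.5, 31.1)
)

# Ensemble mean and min-max spread across GCMs, per scenario
ens <- data.frame(
  scenario = sort(unique(proj$scenario)),
  mean = as.vector(tapply(proj$tasmax_c, proj$scenario, mean)),
  min = as.vector(tapply(proj$tasmax_c, proj$scenario, min)),
  max = as.vector(tapply(proj$tasmax_c, proj$scenario, max))
)
ens$spread <- ens$max - ens$min
ens
```

The `spread` column reflects the variability between models, while the difference between the two rows of `mean` reflects the emissions scenario.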

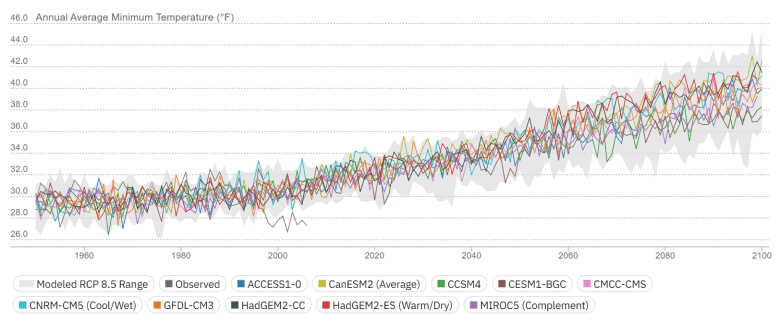

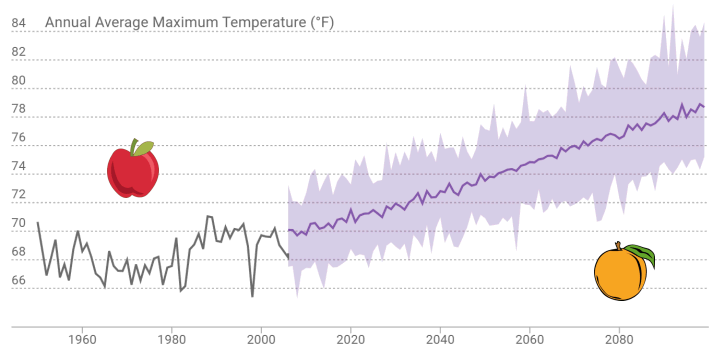

Where can I go right now to see the climate that my city will have 50 years from now?

What is the appropriate historic baseline for modeled future climate scenarios?

Observed historic record and modeled future - different animals

Modeled historic climate and modeled future - comparable
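A common way to apply this is to compute the change for each model relative to that same model's historical period. The sketch below uses made-up values, and the period labels are assumptions for the example. The idea is that a model's systematic bias affects both periods, so it largely drops out of the difference.

```r
# Made-up 30-year mean tasmax (deg C) from the same models for a modeled
# historical period and a modeled future period
clim <- data.frame(
  gcm = c("CanESM2", "CNRM-CM5", "HadGEM2-ES", "MIROC5"),
  hist_1976_2005 = c(28.9, 28.1, 29.3, 28.4),
  fut_2070_2099 = c(32.5, 31.6, 33.0, 31.1)
)

# Change relative to each model's own modeled baseline
clim$delta_c <- clim$fut_2070_2099 - clim$hist_1976_2005

# Ensemble mean change across the four models
mean(clim$delta_c)
```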

How do we make sense of multiple climate futures?

How do we store data for multiple futures?

Individual time series are not that helpful by themselves.

What is the right order of operations for computing metrics, aggregating data, and doing comparisons?

Source Data (example):

That’s 365 * 30 * 10 * 3 = 328,500 values of tasmax, for each pixel in the county!
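The count above can be checked with a few lines of R. The meaning attached to each factor (days per year, years, GCMs, scenarios) is an assumption, since the factors are not labeled in the text.

```r
# Assumed breakdown of the factors in the product above
days_per_year <- 365   # ignoring leap days
n_years <- 30
n_gcms <- 10
n_scenarios <- 3

# Number of daily values per pixel
n_values <- days_per_year * n_years * n_gcms * n_scenarios
format(n_values, big.mark = ",")   # "328,500"
```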

In what order should we apply the following operations?

| Operation | Example | R functions |
|---|---|---|
| | Madera County, Census tracts, etc. | build into API request |
| | 2070-2099 | build into API request |
| | Sep - June | dplyr::filter() |
| | ‘extreme heat’ day | dplyr::mutate() |
| | RCP | dplyr::group_by() |
| | water year | dplyr::group_by() |
| | GCM | dplyr::group_by() |
| | heat spell = extreme heat for at least 3 days | rle() |
| | avg # heat spells per GCM (all date slices combined) | dplyr::summarise() |
| | total # heat spells per water year | dplyr::summarise() |
| | avg # heat spells per emissions scenario (all GCMs combined) | dplyr::summarise() |
| | compare across scenarios, locations | plots, tables, stats tests |
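To make the ordering question concrete, here is a small sketch of counting heat spells with `rle()`. It is written in base R on made-up daily values so that it runs on its own. The 38 °C threshold, the `gcm_a`/`gcm_b` labels, and the `count_heat_spells()` helper are all hypothetical. It computes the daily metric first, detects spells within each GCM, and only then averages across GCMs, and it contrasts that with averaging the temperatures across GCMs first.

```r
# Count runs of at least `min_days` consecutive TRUE values.
# Assumes `is_hot` is a logical vector in date order with no NAs.
count_heat_spells <- function(is_hot, min_days = 3) {
  runs <- rle(is_hot)
  sum(runs$values & runs$lengths >= min_days)
}

# Made-up daily tasmax (deg C) for two hypothetical GCM runs
daily <- data.frame(
  gcm = rep(c("gcm_a", "gcm_b"), each = 10),
  dt = rep(seq(as.Date("2070-07-01"), by = "day", length.out = 10), times = 2),
  tasmax_c = c(36, 39, 40, 41, 35, 39, 39, 33, 40, 41,      # gcm_a
               39, 39, 39, 39, 30, 38.5, 39, 40, 38.1, 30)  # gcm_b
)

# 1. Compute the daily metric (the 38 deg C threshold is an arbitrary assumption)
daily$extreme_heat <- daily$tasmax_c >= 38

# 2. Detect spells within each GCM, so a run never spans two models
spells_per_gcm <- tapply(daily$extreme_heat, daily$gcm, count_heat_spells)
spells_per_gcm          # gcm_a: 1, gcm_b: 2

# 3. Only then aggregate across GCMs
mean(spells_per_gcm)    # 1.5

# Contrast: averaging temperature across GCMs first gives a different answer
daily_mean <- tapply(daily$tasmax_c, daily$dt, mean)
spells_avg_first <- count_heat_spells(as.vector(daily_mean >= 38))
spells_avg_first        # 1
```

With dplyr, steps 1 to 3 would map onto `mutate()`, `group_by()`, and `summarise()` as in the table above.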

Cal-Adapt data always comes down in a “long” format:

ca_loc_pt(coords = c(-117.0, 33.1)) %>%
  ca_cvar(c("tasmax", "tasmin")) %>%
  ca_gcm(gcms[1:4]) %>%
  ca_scenario(scenarios[1:2]) %>%
  ca_period("year") %>%
  ca_years(start = 2040, end = 2060) %>%
  ca_getvals_tbl(quiet = TRUE) %>%
  head()
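In long format each row holds one value, and the variable it belongs to is named in a column. The sketch below mimics that shape with a made-up table and reshapes it to one column per climate variable using base R's `reshape()`. The column names `gcm`, `dt`, `cvar`, and `val` are assumed to match a subset of what the API returns, and the values (in kelvin) are invented.

```r
# Made-up long-format table: one row per GCM, date, and climate variable
long_tbl <- data.frame(
  gcm = rep(c("HadGEM2-ES", "CNRM-CM5"), each = 4),
  dt = rep(rep(c("2040-12-31", "2041-12-31"), each = 2), times = 2),
  cvar = rep(c("tasmax", "tasmin"), times = 4),
  val = c(300.1, 285.2, 300.9, 285.7, 299.4, 284.8, 299.9, 285.0)
)

# Reshape to wide: one row per GCM and date, one column per climate variable
wide_tbl <- reshape(long_tbl, idvar = c("gcm", "dt"),
                    timevar = "cvar", direction = "wide")

# With tasmax and tasmin side by side, a derived metric is a simple subtraction
wide_tbl$range_k <- wide_tbl$val.tasmax - wide_tbl$val.tasmin
wide_tbl
```

In a tidyverse workflow the same step would typically be done with `tidyr::pivot_wider()`.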

To make useful plots, maps, and summaries, you often have to ‘munge’ or ‘wrangle’ the data, which may include:

Fortunately you have a very robust toolbox:


PRO TIP

Qtn. How do I know what data wrangling is needed?

Ans. Work backward from your analysis or visualization functions.

In Notebook 2, you will: