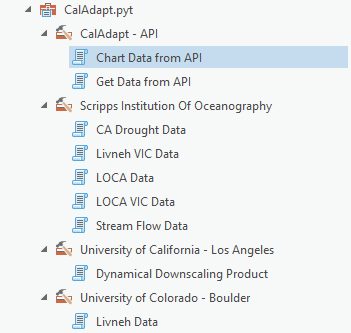

Working with Climate Data in R with caladaptR

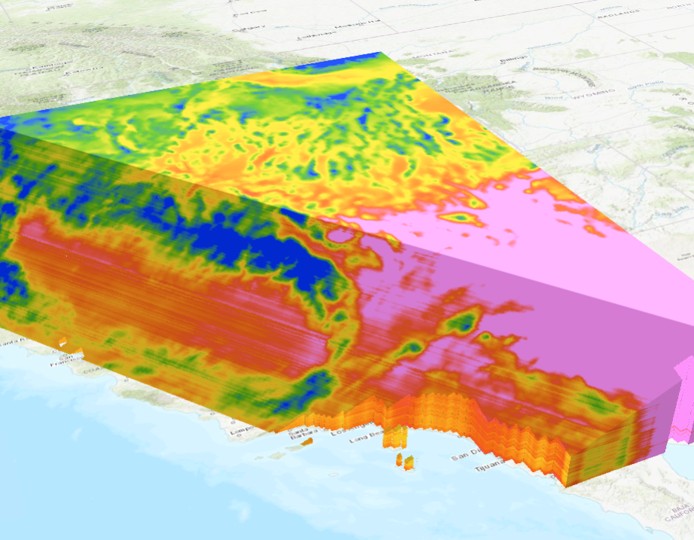

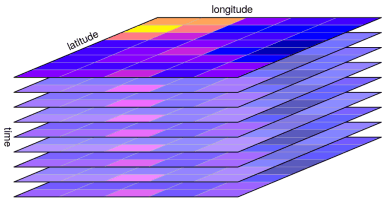

Large Queries and Rasters

Imagine you want to extract the climate data for 36,000 vernal pool locations.

Strategies for the issues that arise when querying a large number (1000s) of locations:

1) Aggregate point features by LOCA grid cells

2) Download rasters

ca_getrst_stars()

3) Save values in a local SQLite database

ca_getvals_db()
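The first strategy above, aggregating points by grid cell, can be sketched in base R. This is a rough illustration only: it assumes a 1/16 degree cell size and a grid that snaps to multiples of that size, which may not match the actual LOCA grid alignment. The `pts` data frame and all column names are hypothetical.

```r
# Hypothetical point locations (decimal degrees); real data would have 1000s of rows
pts <- data.frame(
  id  = 1:5,
  lon = c(-120.51, -120.52, -120.49, -121.30, -121.31),
  lat = c(37.32, 37.33, 37.32, 38.05, 38.06)
)

# Assumed cell size of 1/16 degree; the grid origin used here is an assumption
cell_size <- 1 / 16

# Assign each point to a grid cell by snapping its coordinates
pts$cell_col <- floor(pts$lon / cell_size)
pts$cell_row <- floor(pts$lat / cell_size)
pts$cell_id  <- paste(pts$cell_col, pts$cell_row, sep = "_")

# One row per occupied cell, with the cell centre as the query location
cells <- unique(pts[, c("cell_id", "cell_col", "cell_row")])
cells$lon <- (cells$cell_col + 0.5) * cell_size
cells$lat <- (cells$cell_row + 0.5) * cell_size

# Lookup table to join per-cell results back to the original points
pt_to_cell <- pts[, c("id", "cell_id")]
```

Querying once per occupied cell rather than once per point reduces the number of requests whenever several points share a cell; results can then be joined back to the points through `pt_to_cell`.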

Use ca_getvals_db() Instead of ca_getvals_tbl()

Sample usage:

my_vals <- my_api_req %>%
  ca_getvals_db(db_fn = "my_data.sqlite",
                db_tbl = "daily_vals",
                new_recs_only = TRUE)

new_recs_only = TRUE → picks up where it left off if the connection is interrupted

ca_getvals_db() returns a ‘remote tibble’ linked to a local database

Work with ‘remote tibbles’ using many of the same techniques as regular tibbles (with a few exceptions)
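A minimal sketch of the remote tibble workflow, using an in-memory SQLite database as a stand-in for the file that ca_getvals_db() would create. The table contents and the column names (`feat_id`, `dt`, `val`) are hypothetical and may not match the actual schema. It assumes the DBI, RSQLite, dplyr, and dbplyr packages are installed.

```r
library(DBI)
library(dplyr)

# Stand-in database; a real workflow would connect to the SQLite file on disk
con <- dbConnect(RSQLite::SQLite(), ":memory:")

# Hypothetical daily values for two features
dbWriteTable(con, "daily_vals", data.frame(
  feat_id = c(1, 1, 2, 2),
  dt      = c("2050-01-01", "2050-01-02", "2050-01-01", "2050-01-02"),
  val     = c(285.1, 286.4, 283.9, 284.2)
))

# A remote tibble: a reference to the table, not the data itself
vals_rtbl <- tbl(con, "daily_vals")

# dplyr verbs are translated to SQL and run inside the database;
# collect() brings the (much smaller) summary into memory as a regular tibble
feat_means <- vals_rtbl %>%
  group_by(feat_id) %>%
  summarise(mean_val = mean(val, na.rm = TRUE)) %>%
  collect()

dbDisconnect(con)
```

Summarising before calling `collect()` keeps the large table in the database and pulls only the result into R.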

ca_db_info() and ca_db_indices() help you view and manage database files

See the Large Queries Vignette for details

cap1_tifs <- cap1 %>% ca_getrst_stars(out_dir = "c:/data/tifs")

ca_read_stars()

See also Raster Vignettes

In Notebook 4 you will:

https://ucanr-igis.github.io/caladapt-py/