IGIS Tech Notes describe workflows and techniques for using geospatial science and technologies in research and extension. They are works in progress, and we welcome feedback and comments.

Background

The Remote Automatic Weather Stations (RAWS) network consists of nearly 2,200 weather stations located strategically throughout the United States. Maintained through a partnership of several agencies, the stations provide weather data for a variety of projects including rating fire danger and monitoring air quality.

RAWS units collect, store, and forward data to a computer system at the National Interagency Fire Center (NIFC) in Boise, Idaho. There is no known public API for accessing the data [1, 2], so importing the data involves going to a website such as the Western Regional Climate Center or WXx Weather Stations, entering the parameters for your request in a web form (e.g., station, date range, variables), and generating the results as either a table or a CSV file.

Importing RAWS Data from the Western Regional Climate Center

The rest of this Tech Note demonstrates how to import RAWS data downloaded from the Western Regional Climate Center. This is not a modern data portal, however, and the download process cannot easily be automated [3].

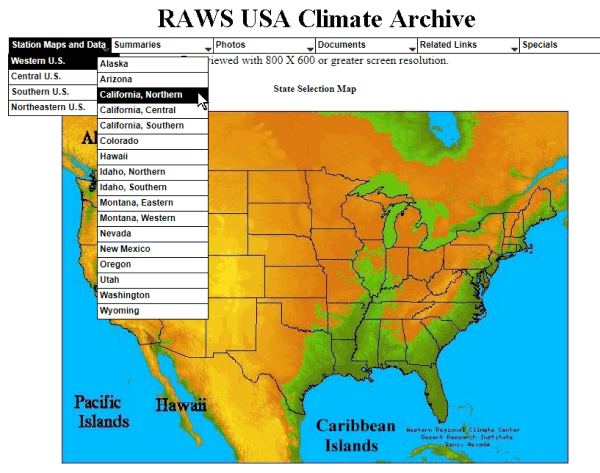

The first step is to find your station of interest. Go to Western Regional Climate Center and select the region of interest:

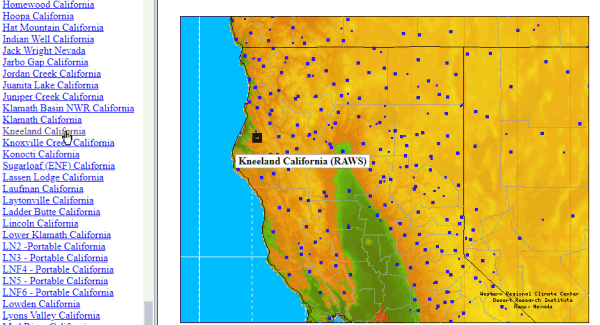

Next, select a station from the list and click on its name to go to the data page for that station:

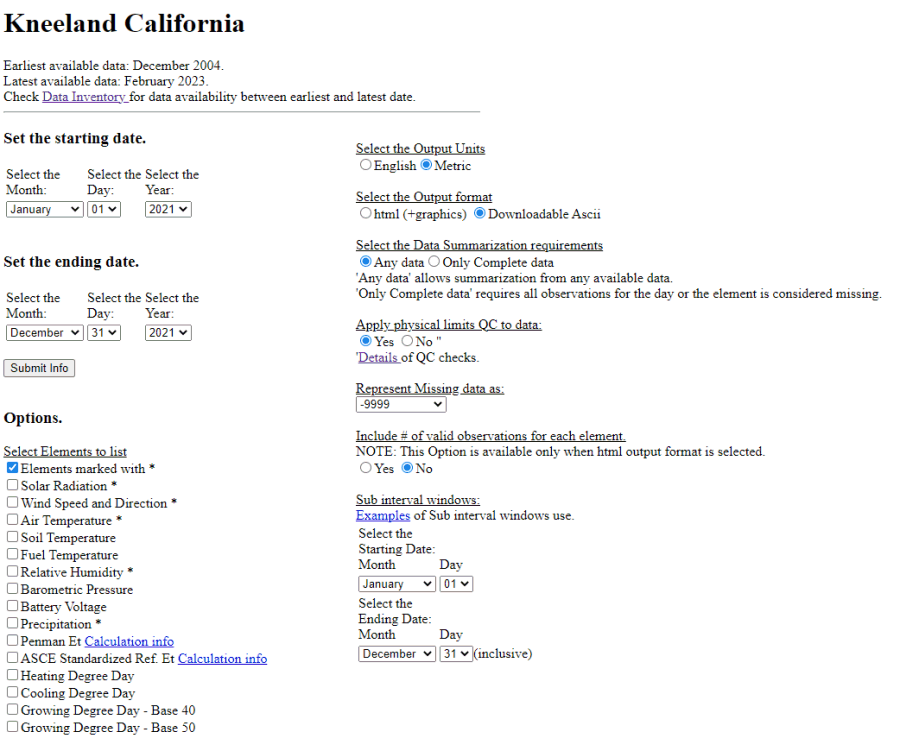

For example, to get to the data download page for Kneeland, CA, click here. To download data for a range of dates, select the ‘Daily Summary Time Series’ link, then enter your start and end dates and choose the variables you want:

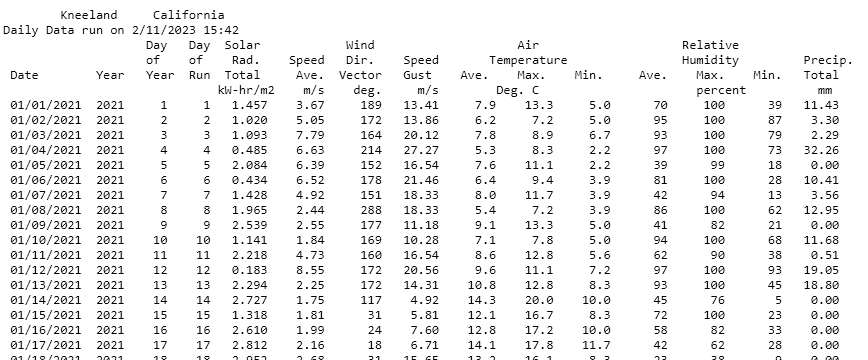

Click ‘Submit Info’ and in a few seconds the data will appear as an ASCII table with fixed columns.

Copy-paste the table into a text editor, and save it as a new text file. You are now ready to import it into R.

Import the RAWS Text File into R

The text file you copied from the web browser above is not a clean CSV, but it can still be imported into R fairly easily. The code below demonstrates how to import a table generated by the screenshot above. Specifically:

- one year of daily summary data (in this case from the Kneeland station)
- metric units
- the default set of weather variables (as shown above)

You can download the file we’ll be importing below here. If you download a different set of variables you’ll have to make adjustments to the code below.

First we load the readr package and find the file:

library(readr)

## Define the file location and make sure it exists
kland_fn <- "kland_daily-sum_2021.txt"
file.exists(kland_fn)
## [1] TRUE

We’ll also get the total number of lines in the file (which we’ll need below):

(num_lines <- kland_fn |> readLines(warn = FALSE) |> length())
## [1] 373

Next, we define the column names manually (because this isn’t a CSV file). Of course if you selected different variables when you asked for the data, your column names will be different.

col_names <- c("date", "year", "yday", "run_day", "solrad_total",
               "windspeed_avg", "winddir_avg", "windgust_max",
               "tmean", "tmax", "tmin",
               "rh_mean", "rh_max", "rh_min", "pr")

Now we’re ready to import. Note below that we’re using read_table with arguments to skip the first 6 lines of the text file, as well as the last two lines (which are a copyright note). That is why n_max is set to num_lines - 8: 6 header lines plus 2 footer lines are excluded.

kland_tbl <- read_table(kland_fn,
                        col_names = col_names,
                        skip = 6,
                        n_max = num_lines - 8,
                        col_types = cols(
                          date = col_date(format = "%m/%d/%Y"),
                          year = col_integer(),
                          yday = col_integer(),
                          run_day = col_integer(),
                          solrad_total = col_double(),
                          windspeed_avg = col_double(),
                          winddir_avg = col_double(),
                          windgust_max = col_double(),
                          tmean = col_double(),
                          tmax = col_double(),
                          tmin = col_double(),
                          rh_mean = col_double(),
                          rh_max = col_double(),
                          rh_min = col_double(),
                          pr = col_double())
)
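The skip and n_max values used here are specific to this particular file. If your download has a different number of header or footer lines, one way to check is to look for the lines that start with a date. The sketch below is only an illustration: it uses a small made-up file (sample_lines is hypothetical, not real WRCC output) and assumes that every data row begins with a date in mm/dd/yyyy form, as the col_date format above implies.

```r
# Hypothetical stand-in for a pasted table: 6 header lines, 3 data rows,
# and 2 footer lines. The layout of a real download may differ.
sample_lines <- c(
  "Station name (placeholder)", "", "header line 3", "header line 4",
  "header line 5", "header line 6",
  "01/01/2021 2021   1   1  1.46  3.67 189 13.4",
  "01/02/2021 2021   2   2  1.02  5.05 172 13.9",
  "01/03/2021 2021   3   3  1.09  7.79 164 20.1",
  "", "Copyright notice (placeholder)"
)
sample_fn <- tempfile(fileext = ".txt")
writeLines(sample_lines, sample_fn)

raw_lines <- readLines(sample_fn, warn = FALSE)

# Flag lines that begin (after optional whitespace) with mm/dd/yyyy
is_data <- grepl("^[[:space:]]*[0-9]{2}/[0-9]{2}/[0-9]{4}", raw_lines)

skip_n   <- which(is_data)[1] - 1                  # header lines to skip
n_rows   <- sum(is_data)                           # data rows to read
footer_n <- length(raw_lines) - skip_n - n_rows    # trailing non-data lines
```

With values like these you could pass skip = skip_n and n_max = n_rows to read_table instead of hard-coding them, provided the data rows are contiguous.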

Inspect the dimensions, head, and tail to make sure we got all the dates and the columns were given the correct data types:

dim(kland_tbl)
## [1] 365  15

head(kland_tbl)
## # A tibble: 6 × 15
##   date        year  yday run_day solrad_to…¹ winds…² windd…³ windg…⁴ tmean  tmax
##   <date>     <int> <int>   <int>       <dbl>   <dbl>   <dbl>   <dbl> <dbl> <dbl>
## 1 2021-01-01  2021     1       1        1.46    3.67     189    13.4   7.9  13.3
## 2 2021-01-02  2021     2       2        1.02    5.05     172    13.9   6.2   7.2
## 3 2021-01-03  2021     3       3        1.09    7.79     164    20.1   7.8   8.9
## 4 2021-01-04  2021     4       4       0.485    6.63     214    27.3   5.3   8.3
## 5 2021-01-05  2021     5       5        2.08    6.39     152    16.5   7.6  11.1
## 6 2021-01-06  2021     6       6       0.434    6.52     178    21.5   6.4   9.4
## # … with 5 more variables: tmin <dbl>, rh_mean <dbl>, rh_max <dbl>,
## #   rh_min <dbl>, pr <dbl>, and abbreviated variable names ¹solrad_total,
## #   ²windspeed_avg, ³winddir_avg, ⁴windgust_max

tail(kland_tbl)
## # A tibble: 6 × 15
##   date        year  yday run_day solrad_to…¹ winds…² windd…³ windg…⁴ tmean  tmax
##   <date>     <int> <int>   <int>       <dbl>   <dbl>   <dbl>   <dbl> <dbl> <dbl>
## 1 2021-12-26  2021   360     360       0.642    4.73     243    13.4  -1.1     0
## 2 2021-12-27  2021   361     361        1.42    2.61     306    11.6  -2.1  -0.6
## 3 2021-12-28  2021   362     362        1.11    3.13     187     7.6     0   1.7
## 4 2021-12-29  2021   363     363        2.16    1.56      12    4.47   1.8     5
## 5 2021-12-30  2021   364     364        1.69    1.77     230    6.26   1.1     5
## 6 2021-12-31  2021   365     365        1.56    2.07      11    8.05   0.4   2.8
## # … with 5 more variables: tmin <dbl>, rh_mean <dbl>, rh_max <dbl>,
## #   rh_min <dbl>, pr <dbl>, and abbreviated variable names ¹solrad_total,
## #   ²windspeed_avg, ³winddir_avg, ⁴windgust_max
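Looking at the head and tail shows the first and last dates, but not whether any days in between are missing or repeated. A minimal sketch of a programmatic check follows. The dates vector here is made-up illustration data with one day deliberately left out; with the imported table you would presumably use kland_tbl$date in its place.

```r
# Hypothetical date column with 2021-01-04 deliberately omitted
dates <- as.Date(c("2021-01-01", "2021-01-02", "2021-01-03", "2021-01-05"))

# Every calendar day between the first and last date in the data
expected <- seq(min(dates), max(dates), by = "day")

# Days that should be present but are not (keeps the Date class)
missing_dates <- expected[!expected %in% dates]

# Position of the first repeated date, or 0 if there are none
first_dup <- anyDuplicated(dates)
```

An empty missing_dates and a first_dup of 0 would suggest that the series is complete and has one row per day.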

Assign Units

We could stop here, but if you want you can assign units for most of the columns.

library(dplyr)
##
## Attaching package: 'dplyr'
## The following objects are masked from 'package:stats':
##
##     filter, lag
## The following objects are masked from 'package:base':
##
##     intersect, setdiff, setequal, union

library(units)
## udunits database from C:/Users/Andy/AppData/Local/R/win-library/4.2/units/share/udunits/udunits2.xml

kland2_tbl <- kland_tbl |>
  mutate(windspeed_avg = set_units(windspeed_avg, m/s),
         winddir_avg = set_units(winddir_avg, arc_degree),
         windgust_max = set_units(windgust_max, m/s),
         tmean = set_units(tmean, degC),
         tmax = set_units(tmax, degC),
         tmin = set_units(tmin, degC),
         rh_mean = set_units(rh_mean, percent),
         rh_max = set_units(rh_max, percent),
         rh_min = set_units(rh_min, percent),
         pr = set_units(pr, mm))

Inspect:

head(kland2_tbl)
## # A tibble: 6 × 15
##   date        year  yday run_day solrad_to…¹ winds…² windd…³ windg…⁴ tmean  tmax
##   <date>     <int> <int>   <int>       <dbl>   [m/s]     [°]   [m/s]  [°C]  [°C]
## 1 2021-01-01  2021     1       1        1.46    3.67     189    13.4   7.9  13.3
## 2 2021-01-02  2021     2       2        1.02    5.05     172    13.9   6.2   7.2
## 3 2021-01-03  2021     3       3        1.09    7.79     164    20.1   7.8   8.9
## 4 2021-01-04  2021     4       4       0.485    6.63     214    27.3   5.3   8.3
## 5 2021-01-05  2021     5       5        2.08    6.39     152    16.5   7.6  11.1
## 6 2021-01-06  2021     6       6       0.434    6.52     178    21.5   6.4   9.4
## # … with 5 more variables: tmin [°C], rh_mean [percent], rh_max [percent],
## #   rh_min [percent], pr [mm], and abbreviated variable names ¹solrad_total,
## #   ²windspeed_avg, ³winddir_avg, ⁴windgust_max
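One practical reason to attach units is that converting between them then becomes a single call. The sketch below is an illustration only: the values are a few numbers copied from the output above rather than the full table, and the target units (degF and km/h) are our choice for the example. It assumes the units package is installed.

```r
library(units)

# A few values standing in for the tmean and windspeed_avg columns
tmean <- set_units(c(7.9, 6.2, 0), degC)
windspeed_avg <- set_units(c(3.67, 5.05), m/s)

# Calling set_units() on an object that already has units converts it
tmean_f <- set_units(tmean, degF)
windspeed_kmh <- set_units(windspeed_avg, km/h)
```

The same calls could be used inside mutate() to add converted columns to a table, and as.numeric() or drop_units() returns plain numbers if another function does not accept units objects.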

Summary

The RAWS network is a good source of weather data in remote, forested areas, where there may not be many alternatives. However, because it is an old system and the data backend hasn’t been updated to a modern API (yet!), accessing the data requires going to a website and querying it manually. If you do that thoughtfully and systematically, though, importing the results into R programmatically is fairly easy with standard R packages.

[1] You can try RAWSmet, an R package developed by Jonathan Callahan that imports RAWS data into R by downloading and parsing files from the Climate, Ecosystem and Fire Applications (CEFA) program at the Desert Research Institute. In our testing this worked, but we were only able to download data through 2017 for our station of interest, so we went back to manually downloading data from the website.

[2] RAWS data is available through the MesoNet API from Synoptic.

[3] Querying data from the web form could probably be automated with web-scraping methods in combination with a virtual browser, using a package like RSelenium. It would be a lot of work, though, and only worth the effort if you needed to query a lot of data.

This work was supported by the USDA - National Institute of Food and Agriculture (Hatch Project 1015742; Powers).